Apple Is Our Hope for Making Artificial Intelligence More Private

Apple is working on AI models that can run entirely on devices, but we may have to wait a few years for them to work well.

There's a price to pay for all the generative AI tools that professionals are using to make themselves more efficient. It's not just a subscription fee to OpenAI, Microsoft Corp. or some other artificial intelligence (AI) company — it's their privacy too. Every interaction with tools like ChatGPT requires a connection to the Internet, and every query is processed on a server (essentially a much more powerful computer than the one you have at home) in a vast data center. Your conversation history? That often gets fed back to the AI model to train it further, along with your personal information. That has rankled some employers worried about data security.

But if the “brain” of an AI tool lived within your own computer instead of routing back to someone else's, the lack of privacy might not be as much of a problem. The great hope for making this happen is Apple Inc.

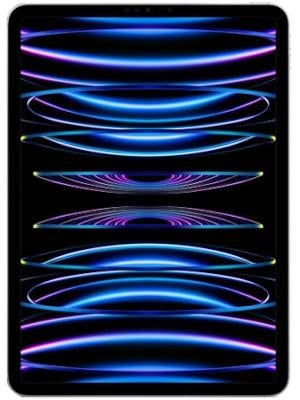

Apple famously isn't first to the party when it comes to new technology. It was slow to enter the smartphone race, and it wasn't the first to bring out a smartwatch, but it now dominates the market for both because it took the time to engineer its way toward the most user-friendly devices. It's the same story with AI. Building the "smartest" generative AI is all about powerful chips, and while Nvidia Corp. dominates that market for cloud-based servers, Apple is well-positioned to be the first to run capable generative AI on smaller devices, even if it was caught off guard by the generative AI boom. Many companies are trying to figure out how to process AI on phones and laptops without having to connect to a server somewhere, but they're hitting technical limitations that may be difficult to surmount without the resources of the $3 trillion hardware company in Cupertino.


For one thing, Apple has already been designing more powerful chips for its phones and laptops, including the advanced M series chips that provide exceptional processing speeds. The chips that Nvidia builds for processing AI on servers are still far more powerful, but that might be OK if AI models themselves become smaller and more efficient.

That points to another phenomenon driving the on-device trend: While leading AI builders like OpenAI and Alphabet Inc.'s Google are focused on making their models as big as possible, many others are trying to make them smaller and more energy-efficient. Over the last few years, OpenAI has found that it could make its large language models sound much more fluent and humanlike simply by increasing the number of parameters in its models and the amount of computing power they used, which required huge cloud servers with powerful chips.

To put that into perspective, OpenAI's GPT-4 was rumored to have more than 1 trillion parameters, while Amazon is reportedly working on a language model called Olympus with 2 trillion. But Mistral, a French AI startup founded by former research scientists at Google DeepMind and Meta Platforms Inc., is working on language models with a fraction of that number: Its latest open-source language model has a little over 40 billion parameters but performs just as well as the current free version of ChatGPT (and its underlying model, GPT-3.5), according to the company.

Some software developers are capitalizing on Mistral's work to get the ball rolling on more private AI services. Gravity AI, a startup based in San Francisco, for instance, recently launched an AI assistant that works on Apple laptops and needs no internet connection. It's built on Mistral's latest, smaller model and works similarly to ChatGPT.

“You shouldn't have to sacrifice privacy for convenience,” says Tye Daniel, co-founder of Gravity AI. “We'll never sell your data.” Right now Gravity is free, but the startup plans to eventually charge customers for a subscription. As a business model, on-device AI promises better margins than AI services that need to be linked to the cloud. That's because developers have to pay cloud providers like Amazon.com Inc. or Microsoft huge sums each time a customer uses their AI tool, since every query runs on those Big Tech companies' servers.

“I spoke with a founder who is building a similar [AI] tool in the cloud,” Daniel says. “He said, ‘The only way we make money is if people don't use the product.'” That might sound counterintuitive, but even OpenAI's CEO Sam Altman has complained about the painful costs of running ChatGPT for his customers. “We'd love it if they use it less,” he told lawmakers last year. (None of that is a problem for cloud giants like Google and Microsoft, of course, because they not only make AI services but rent out the servers that people need to power them.)
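The founder's remark follows from simple arithmetic. The toy calculation below uses purely hypothetical figures (a $10 monthly subscription and a one-cent server cost per query, neither taken from Gravity AI, OpenAI or any cloud provider) and ignores every other business cost. It only illustrates the shape of the argument: with a flat fee, a cloud-hosted tool's margin shrinks with every query, while an on-device tool's compute bill does not grow with usage.

```python
# Toy model of the cloud vs. on-device margin argument.
# All prices are hypothetical placeholders chosen for illustration only.

MONTHLY_FEE = 10.00          # hypothetical flat subscription, USD per user per month
CLOUD_COST_PER_QUERY = 0.01  # hypothetical server cost per query, USD


def monthly_margin(queries: int, on_device: bool) -> float:
    """Developer's monthly margin per user, ignoring all non-compute costs."""
    if on_device:
        # Inference runs on the user's own hardware, so the developer
        # pays nothing per query.
        return MONTHLY_FEE
    # Cloud-hosted: every query is billed by the server provider.
    return MONTHLY_FEE - queries * CLOUD_COST_PER_QUERY


for q in (100, 1_000, 2_000):
    print(f"{q:>5} queries: cloud {monthly_margin(q, False):7.2f}, "
          f"on-device {monthly_margin(q, True):7.2f}")
```

With these made-up numbers the cloud version breaks even at 1,000 queries a month and loses money on heavier users, while the on-device margin stays flat no matter how much the product is used.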

Apple is also working on making AI models smaller, just like the French company Mistral, so that it can run them on its own devices. That's very much in keeping with its walled-garden approach to everything it builds. Last December, its AI researchers announced a breakthrough in running advanced AI tools on iPhones: streamlining large language models using something called flash storage optimization, which keeps a model's parameters in the device's flash storage and loads only the parts it needs into memory.
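The general idea behind running a model out of flash storage is that the full set of weights stays on the device's storage and only the pieces needed at a given step are read into RAM. Apple's published approach is reportedly far more sophisticated (for example, predicting which parts of the model a query will need and organizing reads to suit flash hardware); the sketch below shows only the basic principle of reading selected rows of a weight matrix on demand instead of loading the whole matrix. The tiny matrix, file layout and function names are hypothetical and not Apple's code.

```python
import array
import os
import tempfile

ROWS, COLS = 8, 4                         # tiny stand-in for one weight matrix
ITEM_BYTES = array.array("f").itemsize    # bytes per float32 value (normally 4)


def write_matrix(path: str) -> None:
    """Store a ROWS x COLS float32 matrix on disk, row after row."""
    values = (float(r * COLS + c) for r in range(ROWS) for c in range(COLS))
    with open(path, "wb") as f:
        array.array("f", values).tofile(f)


def load_rows(path: str, wanted: list[int]) -> dict[int, list[float]]:
    """Read only the requested rows from storage, leaving the rest on disk."""
    row_bytes = COLS * ITEM_BYTES
    out: dict[int, list[float]] = {}
    with open(path, "rb") as f:
        for r in wanted:
            f.seek(r * row_bytes)         # jump straight to the start of row r
            row = array.array("f")
            row.fromfile(f, COLS)         # read exactly one row's worth of values
            out[r] = row.tolist()
    return out


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "weights.bin")
    write_matrix(path)
    rows = load_rows(path, [1, 6])        # reads 2 of the 8 rows into memory

print(rows[1])  # [4.0, 5.0, 6.0, 7.0]
```

Only a quarter of the matrix ever enters RAM here; the trade-off is that each read from storage is much slower than a read from memory, which is why deciding what to load, and when, is the hard part.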

Here's where things get a little technical, and where more private AI might yet remain a pipe dream for many months or even years. Large language models don't just need processing power but also memory to work well — and even on the most robust laptops and phones, these AI tools are hitting a so-called “memory wall.” That's one reason why when I tried running the Gravity AI assistant on my MacBook, it spent a good 30 seconds or more processing some of my queries, and the other apps running on my machine froze up while I was waiting.
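A back-of-the-envelope calculation shows why memory, and not just processing power, is the bottleneck. Merely storing a model's weights takes roughly the number of parameters times the bytes per parameter; this sketch ignores the extra working memory a running model also needs, so real requirements are higher. The parameter counts are the ones cited earlier in this piece (the GPT-4 figure is only a rumor), and the 16-, 8- and 4-bit precisions are common choices assumed here for illustration, not settings confirmed by Mistral, OpenAI or Gravity AI.

```python
# Rough weight-storage footprint for models of the sizes mentioned above.
# Assumption: memory = parameters x bits per parameter / 8, ignoring the
# extra working memory (activations, caches) a running model also needs.


def weights_gb(params: float, bits_per_param: int) -> float:
    """Gigabytes (1 GB = 1e9 bytes) needed just to store the weights."""
    return params * bits_per_param / 8 / 1e9


MODELS = {
    "~40B-parameter model (Mistral, approx.)": 40e9,
    "1T-parameter model (GPT-4, rumored)": 1e12,
}

for name, params in MODELS.items():
    for bits in (16, 8, 4):
        print(f"{name}, {bits}-bit weights: {weights_gb(params, bits):,.0f} GB")
```

Even at 4 bits per parameter, a model with roughly 40 billion parameters needs about 20 GB just for its weights, more than the total RAM in many laptops and phones.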

All eyes are now on Apple to see if it can solve this technical quandary. On-device AI models aren't just more private; they're also cheaper to run and better for the environment, given all the carbon that server farms belch into the air.(1) Apple needs to move quickly if it's going to shrink powerful AI models onto its phones: Smartphone sales are stagnating globally, and its arch-rival Samsung is already finding success in distilling AI models down too. If Apple succeeds, that could kickstart a shift in the AI field that benefits more parties than the one in Cupertino.

(1) The International Energy Agency forecasts that global electricity demand from data centers and AI could more than double over the next three years, adding the equivalent of Germany's entire power needs.
